JOMEC’s Reply to the Newswatch Report

Last year JOMEC conducted a BBC Trust-funded impartiality review which examined the Corporation’s online and broadcast news coverage. One element of the research examined reporting of three highly charged issues: immigration, religion and the EU. Another strand looked at how the range of sources featured in BBC broadcast news compared with that of its competitors on ITV and Channel 4. For the broadcast study we examined some of the most influential mass-audience bulletins, including News at Ten, Breakfast News, Newsnight, the Today programme, Newsbeat, 5 Live Breakfast and Your Call. This was a very extensive study which examined a month’s coverage in both 2007 and 2012, producing a data set of 272 hours of news.

A principal concern was to establish the range of sources and opinion in BBC news and whether news had moved from what the Bridcut Report had described as a ‘see-saw’ model of impartiality, based around a binary left-right distinction, to a more sophisticated ‘wagon wheel’ approach which recognised a changing social, political and technological environment. The results of the study indicated that this hadn’t occurred. Across both time periods Westminster sources tended to dominate coverage and their prevalence actually rose, from 49.4% of all source appearances in 2007 to 54.8% in 2012. In reporting of the EU, the dominance was even more pronounced, with party-political sources accounting for 65% of source appearances in 2007 and 79.2% in 2012. One consequence of this was that reporting of the EU tended to be refracted through the prism of political infighting between Labour and the Conservatives, or within the Conservative Party itself. It also meant that the EU tended to be narrowly framed as a threat to British interests. We found that Eurosceptic views were heavily featured, whilst those arguing for the benefit of EU membership were less prominent. We also noted that there was an imbalance in the representation of Conservative and Labour voices, with Tory MPs getting significantly more airtime.

Initially the report and subsequent commentaries generated a minor media ripple, mostly positive. However, last week a report compiled by Newswatch for the right-of-centre think tank Civitas launched an attack on both our professional integrity and the robustness of our research methods. Our research was so flawed and compromised, they claimed, that ‘it wasn’t worth the paper it was written on’. In fact, the Civitas report is so full of inaccuracies, as well as basic misunderstandings of the research process, that we feel compelled to respond. There isn’t room to go through all the errors in the report, but we will look at a selection to illustrate the problems academics can face when researching areas of political controversy.

A central problem with the Civitas report is a basic misunderstanding of what academic research involves. On page 2 of their report the authors write that:

The clean bill of health on the EU component of the Report was delivered despite repeated warnings from many quarters, including the BBC’s own former director general, Mark Thompson, as well as political editor Nick Robinson, that the Corporation’s EU coverage was biased against so-called right-wing opinion. These followed earlier revelations from former senior BBC presenters and editors such as Peter Sissons, Rod Liddle and Robin Aitken, who said the same thing in different ways.

It is not the job of academics to give broadcasters ‘a clean bill of health’. We are not an accreditation body for the BBC and such statements do not appear in our report. Moreover, the research is critical of BBC coverage in a number of areas (notably the failure to move from ‘see-saw to wagon wheel’ and the continued dominance of political sources), which makes this characterisation particularly puzzling. The suggestion that as independent researchers we should consider the opinions of an arbitrarily selected group of BBC staff when conducting our research is highly irregular. Our findings are driven by the data rather than by anecdotal opinions.

The report also made a direct and very serious attack on our independence and integrity:

Newswatch has investigated the links between the Cardiff department and the BBC. There are two very strong connections which are particularly noteworthy. Richard Tait, a former BBC editor, who was subsequently appointed a BBC Governor and Trustee (2004-10) is now a Cardiff Professor of Journalism. Richard Sambrook, who was BBC Head of News until 2008 (and hence during one of the periods covered by the research) is the director of the Cardiff Centre of Journalism Media and Cultural Studies, and is a Professor of Journalism. The research project was commissioned by the Trustees directly from Professor Sambrook. It is also of note in this connection that Professor Karin Wahl-Jorgensen (Professor Sambrook’s deputy), who was director of the Prebble content analysis project, also worked recently for the European Commission on a report asking how the media were covering the idea of greater EU integration and why the UK was sceptical of that idea.

First, the report was not ‘commissioned by the Trustees directly from Professor Sambrook’; it was secured via a competitive tendering process in which a number of university departments and independent research consultancies bid for the research. This is standard practice for these kinds of projects. Second, Professor Sambrook is Director of the Centre for Journalism and Karin Wahl-Jorgensen is Director of Research in the School of Journalism, Media and Cultural Studies. Third, Professor Sambrook was Head of BBC UK News until 2004 and then Head of BBC Global (World Service) News until 2010. This means that he was not involved in the domestic news service during the periods of the research. Fourth, Professor Tait had nothing to do with the Trust’s decision to review impartiality or to commission the research. He left the BBC Trust in 2010 and the Trust’s decision was taken in 2012. Fifth, the claim that Professor Wahl-Jorgensen worked on an EU report ‘asking how the media were covering the idea of greater EU integration and why the UK was sceptical of that idea’ is incorrect. Professor Wahl-Jorgensen was the UK PI on a €4 million European Commission Framework 6 project on European citizenship and the public sphere. This is high-profile and much sought-after funding for independent academic research.

Newswatch argue that the above links ‘seriously bring into question’ the independence of our research and ‘raise(s) questions about whether those from the Cardiff School of Journalism understand the need for rigour in the broadcast research process’. There are two obvious points to be made about these arguments. First, if research can be dismissed on the basis that the authors have some links to a particular organisation or viewpoint, then we would have to dismiss everything that the report’s authors write since, judging by their website, they write little else but polemical articles about BBC coverage of the EU. Second, our school is recognised as a world-renowned centre for journalism research and training. Without recruiting former journalists, editors and executives from our leading broadcaster to staff our broadcast journalism and cutting-edge computational journalism programmes, we could not possibly compete with other institutions offering degrees in journalism.

The report also makes inaccurate statements about our methodology. It alleges that rather than generate a new sample, we recycled old research and employed a convenience sampling methodology:

However, rather than selecting a sample of BBC news coverage best suited to answering this question, the Cardiff researchers apparently recycled for the Prebble report a piece of research from 2007 which had originally been gathered for a survey of the BBC’s coverage of the UK’s nations and regions. It was thus a prime example of ‘convenience sampling’, defined in the academic literature on broadcast monitoring methodology as a sample which is not properly preconceived and directed, and instead is ‘more the product of expediency, chance and opportunity than of deliberate intent.’

The origin of this completely inaccurate claim is hard to fathom. As our report makes very clear, the sample used was completely original and unrelated to the selection of programmes analysed for the 2007 report on the UK’s nations and regions. Moreover, we didn’t employ ‘convenience sampling’ but a purposive sampling approach that focused on a range of high-profile BBC outlets. The Newswatch authors’ failure to understand what is a conventional content analysis approach led them to further spurious challenges. For instance, the fact that we only included the 7am to 8:30am portion of weekday Today broadcasts was said to have introduced ‘constant errors’ into our methodology:

Two-way discussions between presenters and correspondents, an essential component of Today, would have been seriously under-emphasised as at least six of these segments are broadcast during the first hour of Today on weekday mornings, whereas the rest of the programme is more likely to carry interviews with invited guests. The regular ‘Yesterday in Parliament’ slot, (usually broadcast Tuesday to Friday at 6.45am, and at 7.20am on Saturdays), was also omitted entirely, thereby affecting the data for (and potentially the balance between) political speakers. And the religious affairs slot ‘Thought for the Day’ would have achieved more than twice its actual statistical prominence, because it is regularly positioned at the same time each morning, and would have been captured in all monitored programmes.

This part of the Today programme was purposively sampled both because it reaches the widest audience and because we wanted to capture these elements. Our remit was to assess the range of voices in broadcasting, so selecting the part of the show where there would be a preponderance of interviews rather than two-ways was more germane to the research brief. One of our three areas of study was religion, so it was logical for us to capture the part of the programme with ‘Thought for the Day’. We narrowed the sample to weekdays because the audience is much larger on weekdays and the show exerts a greater agenda-setting influence on the rest of the print and broadcast media.

The report also criticised our sample size, which it said was much smaller than the 6,000-hour Newswatch sample. Furthermore, we were alleged to have discarded 30% of our EU data:

Cardiff – seemingly through choice – also narrowed their potential sample even further. A key component of their research focused solely on the main EU stories during each survey period: the Lisbon Treaty in 2007 and negotiations on the EU Budget in 2012. Both themes accounted for roughly 70% of the total EU coverage. They therefore discarded from this area of analysis 30% of the material they had actually gathered.

The claim that we discarded 30% of our data is, again, simply inaccurate. As a part of our analysis of each of the three topics – religion, immigration and Britain’s relationship to Europe – we undertook a second level of analysis on the dominant stories in our sample – those stories, in other words, that the data suggested were more prominent – but we didn’t ‘discard’ any data. The report then makes another erroneous attack on our sampling methodology:

Cardiff’s sample was narrowed further by excluding any items that it did not consider to be specifically about the UK’s relationship with the European Union, including reports on EU leaders, the euro crisis or other countries’ relations with the EU. Cardiff do not explain their precise coding methodology, so it is impossible to know how many of the remaining nine speakers would have been excluded on account of these criteria.

The decision to focus on the UK’s relationship with the EU reflected the brief that was agreed with the BBC. We explained our coding methodology in detail both in the appendices and in the main body of the report. A key problem is that, once again, the report’s authors demonstrate that they don’t understand content analysis methodology. Our unit of analysis was not the speaker but the story (as is usually the case for media content analyses, and as is clearly explained in our methodology and throughout the report), and all directly and indirectly quoted sources, up to 16 per story, were analysed.

This misunderstanding of content analysis methodology unfortunately shapes how the Newswatch report discusses our research in the subsequent section: it is assumed that individual sources are selected or de-selected from the analysis on the basis of the topics they discuss. Instead, following standard content analysis practice, we analysed the range of sources within each story.

The very serious allegation that we misrepresented our dataset is also made. It is claimed that we ‘cherry-picked’ data from the report to erroneously argue that Conservative MPs received more airtime in BBC coverage:

But Professor Lewis was selective: he cherry-picked the most dramatic figures – the 3:1 and 4:1 statistics he quotes relate only to the most senior party figures in the survey sample and he excluded 95 MPs from his interpretation of the Cardiff 2012.

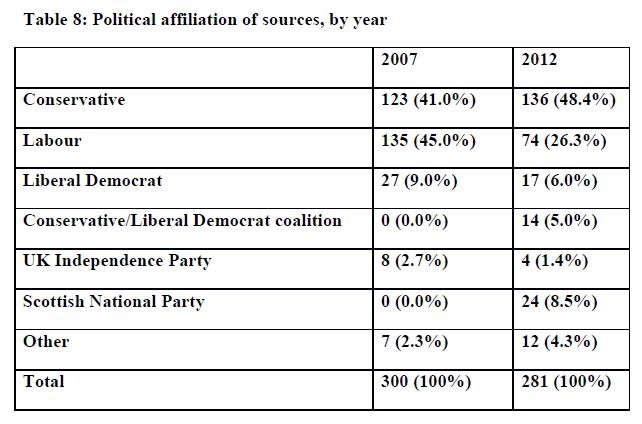

The implication here is that if the 95 MPs had been included a different picture would emerge of the balance between Conservative and Labour representatives. However the table on page 16 of our report, which includes the 95 MPs, clearly shows that this is not the case:

Ultimately the research community and wider public can make up their own minds about whose word to take on this matter, although we would urge people to read the full report. Given the sheer number of inaccuracies contained in their attack upon it, it is not at all clear that Newswatch have done so. The affair serves as a cautionary tale illustrating how researchers can be the target of spurious attacks when trying to conduct mainstream social science.